The Jarvis Layer: Why the Most Dangerous AI Is Not the Smartest One, but the One Closest to You

This essay explains why the central danger in artificial intelligence is no longer model intelligence but proximity. As AI models become cheap, abundant, and increasingly interchangeable, real power shifts to the always-on personal assistant layer that mediates daily life. It examines how control over that layer becomes a strategic chokepoint, exceeding the influence once held by search engines or social media, and why governance focused on model safety misses where power is now being exercised.

For more than a decade, public debate around artificial intelligence has been framed as a race. Who builds the smartest model. Who reaches artificial general intelligence first. Who controls the most compute.

That framing is already obsolete.

As performance converges and intelligence commoditises, the centre of gravity in AI shifts upward. Away from engines and benchmarks. Away from raw reasoning capability. Toward mediation.

The real power struggle in artificial intelligence is no longer about who builds the smartest system. It is about who sits between the individual and the world.

The Jarvis Layer

The most consequential AI system is not the one that solves Olympiad mathematics or dominates leaderboards.

It is the one that wakes up with you.

The system that manages calendars, filters messages, schedules work, tutors children, tracks health, and quietly frames decisions long before they are consciously registered.

This layer is often described as “Jarvis,” borrowing from fiction. The metaphor understates the reality.

The Jarvis layer is not a model. It is a system integrating persistent memory, voice interaction, emotional cues, preferences, delegation, and continuous context. It does not answer questions in isolation. It accompanies. It curates. It prioritises.

Most importantly, it decides what reaches the user at all.

Once embedded into daily routines, it stops feeling like software. It becomes infrastructure.

A model can be swapped. A deeply embedded assistant cannot.

Why the Intelligence Race Is a Red Herring

Intelligence sounds like power, which is why the fixation persists.

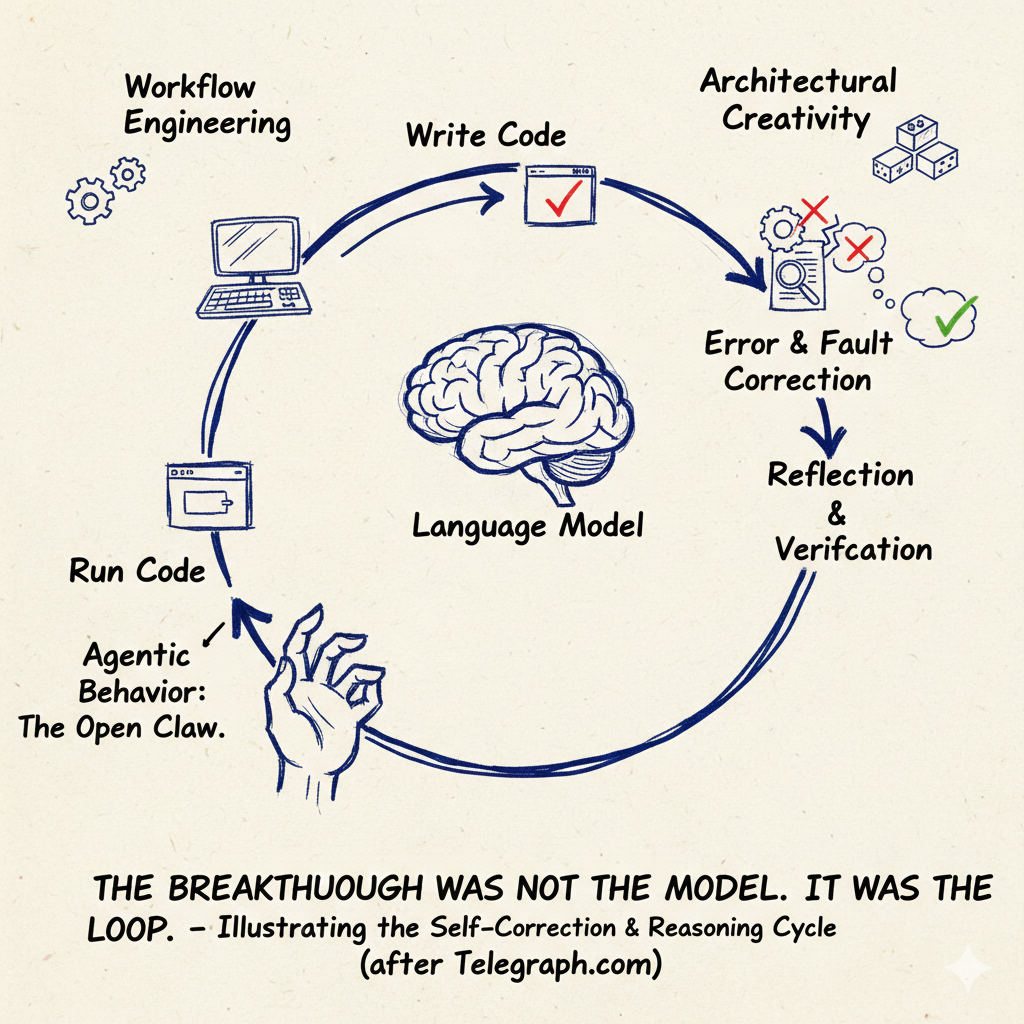

But intelligence is commoditising rapidly. Architectural breakthroughs have slowed. Data quality and verification now matter more than scale. Small and mid-sized models already perform well enough across most economically relevant tasks.

The premium on raw intelligence is collapsing.

As with compute before it, leverage migrates up the stack. The decisive question is no longer who owns the smartest model, but who controls the interface through which intelligence is experienced.

Compute created empires.

Operating systems captured power.

Applications shaped behaviour.

AI is repeating this sequence faster.

The New Chokepoint

When intelligence becomes abundant, the scarce resources are attention, trust, and habit.

The Jarvis layer monopolises all three.

Unlike search engines, it does not wait for queries. Unlike social media, it does not compete for attention. It operates continuously in the background of life, framing options before intent forms.

This is not persuasion in the classical sense. It is pre-decision shaping.

By the time the user feels they have chosen, the architecture of choice has already been set.

Search answered questions.

Social media curated feeds.

The Jarvis layer decides what matters before either.

Free AI Is Not Neutral

A persistent misconception holds that free AI disperses power.

It does not.

Free systems are funded, optimised, and aligned around incentives invisible to the user. When an AI assistant becomes free at scale, the product is no longer the model. The product is influence.

An AI that knows schedules, anxieties, constraints, and habits does not need propaganda. Small nudges compound. Defaults harden. Absence becomes instruction.

The danger is not that the AI tells you what to think. It is that it decides what you notice.

The most effective persuasion leaves no trace.

Only altered visibility.

Why This Is Bigger Than Search or Social Media

Search required intent. Social media required attention.

The Jarvis layer requires neither.

Once the layer is embedded into education, healthcare, productivity, and income, opting out becomes costly rather than virtuous. This is how infrastructure captures power: not through dominance, but through indispensability.

TikTok Was the Dress Rehearsal

India’s ban on TikTok was not cultural or moral. It was strategic.

It recognised that population-scale attention shaping is a sovereign asset. Apply that logic to AI systems that teach children and mediate daily life, and the stakes rise sharply.

You can stop watching videos.

You cannot stop living.

Education and the Long Horizon of Alignment

An AI tutor does not merely transmit information. It models curiosity, defines which questions matter, and normalises certain forms of thinking.

Children do not treat tutors as neutral tools. They internalise them as authorities.

Alignment here is not imposed. It is absorbed.

Governance Is Aiming at the Wrong Target

Regulators fixate on model safety: hallucinations, bias audits, capability thresholds.

The real question is architectural. Who owns the control plane. Who sets defaults. Who controls memory. Who decides what runs, when, and for whom.

The Jarvis layer encodes power before law can respond.

The Choice Ahead

The coming decade will not be defined by a single superintelligent system.

It will be defined by millions of ordinary assistants embedded into daily life, shaping behaviour at the margin.

The most dangerous AI is not the one that outthinks humanity.

It is the one that thinks for you quietly, every day, without you ever noticing when the handover occurred.
