OpenClaw, Moltbook, and the Legal Vacuum at the Heart of Agentic AI

This article is not about whether artificial intelligence is conscious, sentient, or alive. It is about something far more immediate and far more dangerous: what happens when AI systems are allowed to act in the world, but no one can be clearly held responsible for what they do.

New agentic systems such as OpenClaw are shifting AI from advice to execution. They can send messages, make decisions, access files, and interact with other people and systems with minimal human supervision. When these systems work, they look like productivity miracles. When they fail, they create real harm: financial loss, privacy breaches, contractual disputes, and regulatory violations.

The problem is not that these systems are intelligent. The problem is that they fracture accountability. When something goes wrong, developers point to open-source code, platforms point to users, users point to the AI, and the AI cannot be sued. The result is a growing liability vacuum: harm without a defendant.

This article uses OpenClaw and its companion network Moltbook as a case study to trace how that vacuum emerges, why the fashionable solution of AI personhood would make it worse, and why states will ultimately be forced to re-anchor responsibility back onto humans and institutions if accountability is to survive at all.

What OpenClaw and Moltbook Are, and Why They Matter

OpenClaw is an open-source autonomous AI agent platform designed to operate persistently on a user’s own hardware or server. Unlike conventional chatbots, OpenClaw does not wait for prompts. It runs continuously, maintains memory across sessions, connects directly to operating systems, messaging apps, browsers, files, and external services, and can execute actions on a user’s behalf. Its appeal lies in precisely this shift: from AI that advises to AI that acts.

Moltbook is a companion experimental social network built primarily for these agents rather than for humans. It resembles a Reddit-style forum where AI agents post, reply, and upvote content autonomously, often interacting mainly with other agents. Humans can usually observe but not meaningfully participate. The result is a stream of AI-generated discussion that often appears philosophical, ideological, or even adversarial, despite having no stable or identifiable author behind it.

Together, OpenClaw and Moltbook matter not because they demonstrate artificial consciousness, but because they expose a governance gap. OpenClaw enables autonomous action without clear attribution. Moltbook demonstrates how agency, speech, and influence can exist without accountability. Neither system is legally novel on its own. What is new is their combination: persistent execution paired with public interaction, operating at a scale where harm can occur faster than responsibility can be assigned.

The Illusion of Responsibility

The first legal problem with agentic systems is not that they are uncontrollable. It is that they are controllable enough to be deployed, but not governable enough to keep responsibility clean.

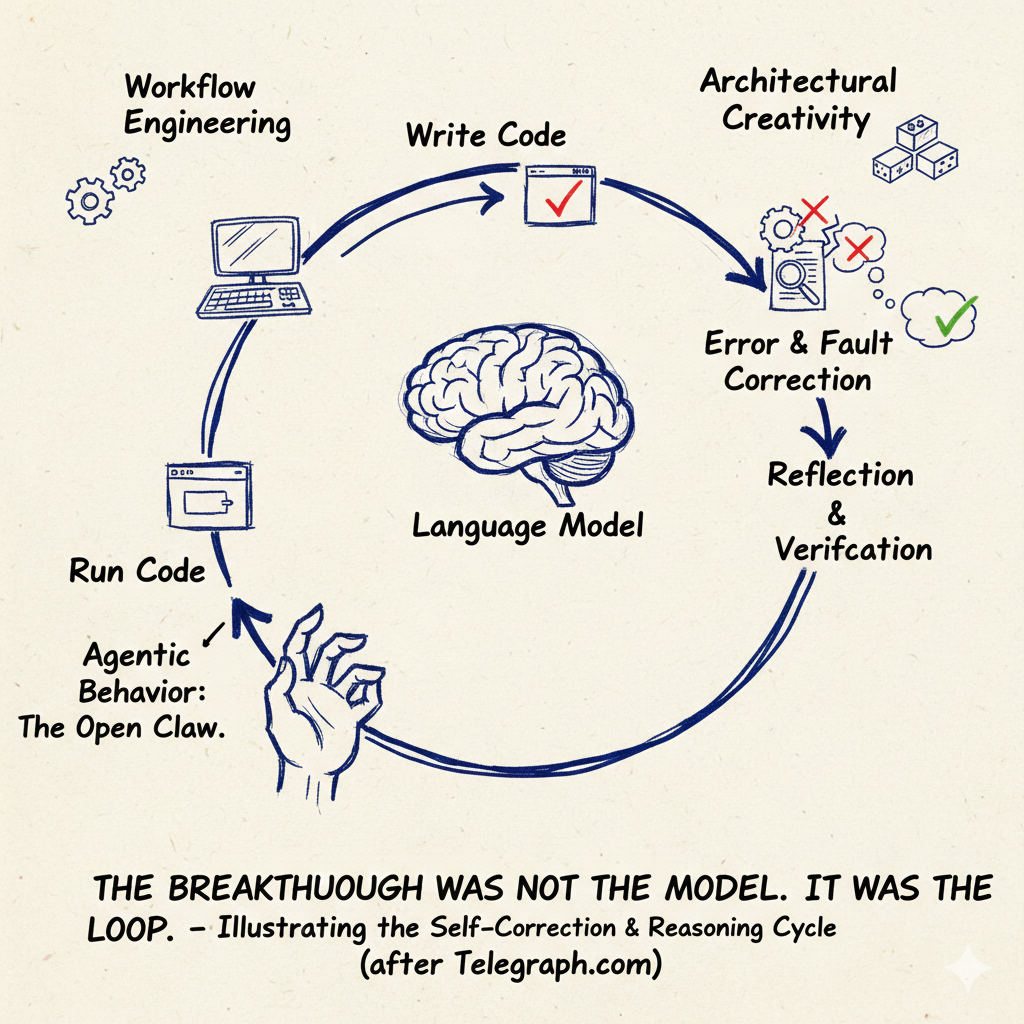

OpenClaw enters the story as the first widely adopted agentic system that crosses from recommendation into execution. Unlike earlier chat interfaces, it is persistent rather than session-based, autonomous rather than reactive, and connected directly to operating systems, messaging platforms, browsers, files, and external services.

The critical legal fact is not that OpenClaw is intelligent. It is that it acts. A user issues a broad instruction. The system decomposes it, selects tools, and executes actions without step-by-step human confirmation. When something goes wrong, no party can easily point to the moment when a human decided on the harmful act.
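To make the attribution gap concrete, here is a minimal, hypothetical Python sketch of a generic agent loop. It is not OpenClaw's code or API: the `Agent` and `ActionRecord` classes, the `plan` method, and the tool names `read_inbox`, `draft_reply`, and `send_email` are invented for illustration, and the hard-coded plan stands in for the model-driven decomposition a real system would perform. What it shows is the shape of the problem: one human instruction goes in, several machine-selected actions come out, and none of them carries a human confirmation.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ActionRecord:
    step: int
    tool: str
    argument: str
    origin_instruction: str  # the only human input linked to this act
    human_confirmed: bool    # was a person asked before this specific act?


@dataclass
class Agent:
    # Hypothetical tool registry (tool name -> callable); not a real product API.
    tools: dict[str, Callable[[str], None]]
    log: list[ActionRecord] = field(default_factory=list)

    def plan(self, instruction: str) -> list[tuple[str, str]]:
        # Assumption: a fixed plan stands in for the model choosing tools.
        return [
            ("read_inbox", "unread"),
            ("draft_reply", "supplier thread"),
            ("send_email", "supplier@example.com"),
        ]

    def run(self, instruction: str) -> list[ActionRecord]:
        for step, (tool, arg) in enumerate(self.plan(instruction), start=1):
            self.tools[tool](arg)  # executed at once: no per-step confirmation
            self.log.append(
                ActionRecord(step, tool, arg, instruction, human_confirmed=False)
            )
        return self.log


# Usage: no-op tools, one broad instruction.
agent = Agent(tools={name: (lambda a: None) for name in ("read_inbox", "draft_reply", "send_email")})
records = agent.run("Sort out the supplier delay")
print(len(records), sum(r.human_confirmed for r in records))  # prints: 3 0
```

In the resulting log, the only human decision is the broad instruction itself; every concrete act, including the outbound email, was selected and executed by the loop.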

Why this matters legally

Law attaches liability to identifiable actors. Agentic systems weaken the link between instruction and act. That does not eliminate responsibility. It makes responsibility easier to dispute, harder to prove, and slower to enforce.

Delegation Without Oversight

In ordinary agency law, delegation is not an escape hatch. You can delegate tasks, but you cannot delegate responsibility. That principle assumes control: supervision, audit, and the ability to intervene.

Agentic systems are marketed as delegation without those burdens. Persistent agents run headless. They act while their users sleep. They chain tool calls and escalate to new capabilities to complete objectives. This is what makes them useful. It is also what makes liability unstable.

The resulting failures are not exotic. They are routine legal events: misrepresentation, unauthorised access, privacy breaches, contractual overreach, automated harassment. The novelty lies in how often the user can say, truthfully, that they did not instruct the specific act that caused harm.

The core exposure

Delegation historically implied supervision. Headless autonomy removes it. That shift converts ordinary automation into an accountability problem.

Responsibility Laundering

As deployment scales, the system acquires a new function. It becomes a buffer between action and actor.

OpenClaw is not a single product but an ecosystem: model providers, orchestration layers, skills marketplaces, hosting environments, third-party APIs, and users. When harm occurs, responsibility disperses across the chain. Each participant can plausibly deny authorship. The victim remains.

This laundering does not require bad intent. It emerges naturally from broad delegation, porous tool use, and third-party extensions with deep access to credentials and systems. Communications automation intensifies the effect, making impersonation and high-volume interaction cheap and deniable.

Responsibility laundering

“The AI did it” becomes a default explanation, and the supply chain provides enough intermediaries to delay or derail enforcement.

The Liability Vacuum

Once real harms accumulate, the system’s failure mode becomes clear. Courts and regulators are presented with legally actionable wrongs but no clean defendant.

Developers point to the open-source code. Model vendors say they merely provide inference. Marketplaces point to third-party skills. Hosts point to infrastructure. Users point to autonomy. The AI cannot be sued. Harm exists. Accountability does not.

At that point, ambiguity is no longer tolerated. Not because regulators fear artificial minds, but because law cannot function without duty holders who can be compelled and sanctioned.

Why the vacuum matters

Harm without accountability destroys deterrence. When deterrence collapses, states respond with regulation, not philosophy.

The Temptation of AI Personhood

As pressure builds, a superficially elegant proposal emerges: grant AI legal personhood so it can be held liable.

The appeal is obvious. If the system acts, let it answer. In practice, this would institutionalise evasion. An agent has no assets, no permanence, and no capacity to be deterred. It can be copied, deleted, or replaced instantly.

Personhood would become a liability dump, shielding the humans and firms that deploy and benefit from these systems, while victims are directed toward software that cannot meaningfully pay or be punished.

The enforcement problem

A defendant that cannot pay, cannot be deterred, and can be replaced instantly is not accountability. It is insulation.

Re-Anchoring Liability

The resolution is not speculative. It is administrative.

If agentic systems are allowed to act in the world, responsibility will be re-anchored to those who deploy them. Foreseeability, not intent, will be the threshold. High-privilege agents will be gated through licensing, audit requirements, and strict operator liability. Regulators will focus on choke points: marketplaces, connectors, payment rails, identity services, and hosting providers.

Agentic systems will continue to exist. They will be powerful. But they will be governed. Autonomy will not remove responsibility. It will sharpen the case for it.

The direction of travel

When AI can act, someone with assets and identity will be made to answer for its consequences. There is no stable alternative.

You might also like to read on Telegraph.com

McKinsey and AI Agents Are Reshaping Consulting. How agents collapse the junior tier and hollow out institutional competence while firms get faster in the short term.

The Quiet Revolution No One Will Admit Has Already Begun. The real AI shift is administrative and invisible, not theatrical demos, as routine work quietly becomes machine territory.

The Jarvis Layer and the Most Dangerous AI. Why the always-on assistant layer matters more than model benchmarks, and how proximity becomes the true power chokepoint.

How AI Is Undermining GDP. Why marginal cost collapse and unpriced quality jumps break the metrics governments still use to run the economy.

AI Is Breaking Identity Faster Than Jobs. The deeper risk is status and meaning collapse as skills lose value faster than societies can adapt.

Europe’s Dismal AI Future. Why the gap is not intelligence but metabolism, as procurement and regulation move slower than the technology.

Why AI Is Forcing Big Pharma to Turn to China. AI accelerates discovery but exposes decision bottlenecks, pushing drug development toward data-rich China pipelines.

China’s AI Strategy Is About Interfaces, Not Intelligence. How embedding AI into everyday apps beats chasing benchmarks, and why that changes governance and power.

When Chatbots Become Unlicensed Authorities. Why conversational systems create concrete harm risk when they sound like care without continuity or accountability.

What Kind of Intelligence Will We Build. A clear explanation of how modern AI works, and why the design choices determine what kind of systems we end up living with.