The Quiet AI Revolution No One Noticed Until It Was Everywhere

The most important technological shifts rarely arrive with announcements. They do not declare themselves as moments. They seep into daily routines, settle into institutions, and only later are described—retroactively—as “revolutions.” By the time there is agreement that something fundamental has changed, the change has already hardened into infrastructure.

This is the right way to understand what is happening with artificial intelligence. Not as a single breakthrough or a dramatic milestone, but as a quiet re-ordering of how work is coordinated, decisions are prepared, and knowledge is processed. The transition is already underway. What remains unsettled is how quickly institutions are willing to admit it.

There is a simple rule that explains much of the confusion: when a system works reliably, society stops noticing it.

Infrastructure becomes visible mainly when it fails. Until then, it fades into the background. This is why major transitions are often recognised late, debated awkwardly, and narrated afterwards as if they were obvious.

Why revolutions don’t feel like revolutions

Electricity did not arrive as a single event. Neither did running water, mass education, or the internet. These systems spread unevenly, encountered resistance, and only gradually became indispensable. The turning point was not a ceremony. It was the moment daily life quietly reorganised itself around them.

Artificial intelligence is following the same pattern. The most consequential uses are no longer theatrical demonstrations. They are ordinary, repetitive, and administrative: drafting documents, summarising meetings, classifying records, checking compliance, forecasting outcomes. The more dependable these systems become, the less visible they appear.

The threshold problem

Societies tend to argue about thresholds after they have crossed them. When new capabilities emerge, attention turns to definitions: what counts, who decides, and whether the line has truly been passed. This is understandable, but it often misses the practical signal.

In reality, the threshold is crossed when behaviour changes. When organisations redesign workflows around a tool—when routines assume its presence—the transition has already occurred, regardless of whether language has caught up.

AI as coordination infrastructure

The most important impact of AI is not that it “thinks.” It is that it reduces the cost of coordination. Tasks that once required time, expertise, and layers of review can now be prepared cheaply and at scale. The bottleneck shifts from analysis to approval, from production to accountability.

This is already visible in the public sector. Governments are quietly deploying AI systems for fraud detection, welfare routing, and high-volume case processing. These tools are rarely described as revolutionary. They are framed as efficiency measures. But the effect is structural: decisions are triaged differently, workloads are redistributed, and bureaucratic routines are rewritten around machine-assisted judgment.

Why institutions still appear slow

Institutions are not ignorant of these changes. They are constrained by liability, regulation, procurement rules, and political risk. Adoption therefore proceeds unevenly: quietly inside organisations, cautiously in formal policy, and noisily in public debate.

Physical bottlenecks reinforce this impression of delay. Energy demand, data-centre capacity, and compute availability are now visible constraints. But these are not signs of reversal. They are signs of scale. Infrastructure strain appears not when a technology is failing, but when it is being used seriously.

The plateau argument—and why it misleads

A common counter-argument holds that AI has entered a plateau: improvements are incremental, errors persist, and many projects struggle to demonstrate immediate return on investment. This critique is not wrong, but it is incomplete.

Infrastructure does not announce itself through perfection. It announces itself through dependence. Hallucinations, bottlenecks, and uneven performance do not signal failure; they signal integration. Railways were dangerous before they were safe. Electricity was unreliable before it was trusted. The same pattern applies here.

The moment it becomes undeniable

Infrastructure becomes visible when it breaks. For AI, this moment may arrive through systemic error, large-scale fraud, administrative failure, or sudden labour displacement that overwhelms existing systems. Only then will dependence be widely acknowledged.

At that point, the debate will shift decisively. The question will no longer be whether the transition has happened, but how it should be governed. History will tidy the narrative, assign dates, and claim foresight.

The record will show something simpler. The change arrived quietly. It was visible in routines, not announcements. And it was mistaken for the absence of revolution precisely because it worked.

From Discovery to Simulation: the quiet revolution remaking medicine

The same pattern now reshaping artificial intelligence is already visible in medicine. Not as a single breakthrough, not as a miracle cure, but as a structural shift in how biological knowledge is produced. The centre of gravity is moving away from discovery by trial-and-error and toward simulation at scale.

For most of modern medical history, progress depended on slow, linear processes: laboratory experiments, animal models, small clinical trials, and incremental validation. Biology was treated as something to be probed cautiously because it could not be modelled reliably. That constraint is now weakening.

Advances in computation and modelling have turned large parts of biology into a tractable systems problem. Protein structures can be predicted in silico. Drug candidates can be screened against millions of simulated interactions before a single compound is synthesised. Entire disease pathways can be explored computationally in days rather than years.

This is why cures increasingly appear to “arrive suddenly.” The work has often been underway for years, but the decisive phase now happens inside simulation. Once a model converges, translation into the physical world feels abrupt—almost discontinuous—despite being the product of long accumulation.

The bottleneck, however, has not disappeared. It has moved. As with artificial intelligence more broadly, the limiting factor is no longer capability but validation. Regulation, safety testing, approval pipelines, and institutional caution now determine the speed at which simulated breakthroughs reach patients.

This explains the growing tension in healthcare systems: scientific capability accelerates while regulatory and ethical frameworks remain calibrated for an earlier era. The result is not recklessness, but delay—and a widening gap between what is technically possible and what is permitted.

As this shift deepens, medicine will look less like a sequence of isolated discoveries and more like an engineering discipline: model first, test selectively, deploy cautiously. The transformation is already underway. It simply does not announce itself as a revolution until long after it has begun.

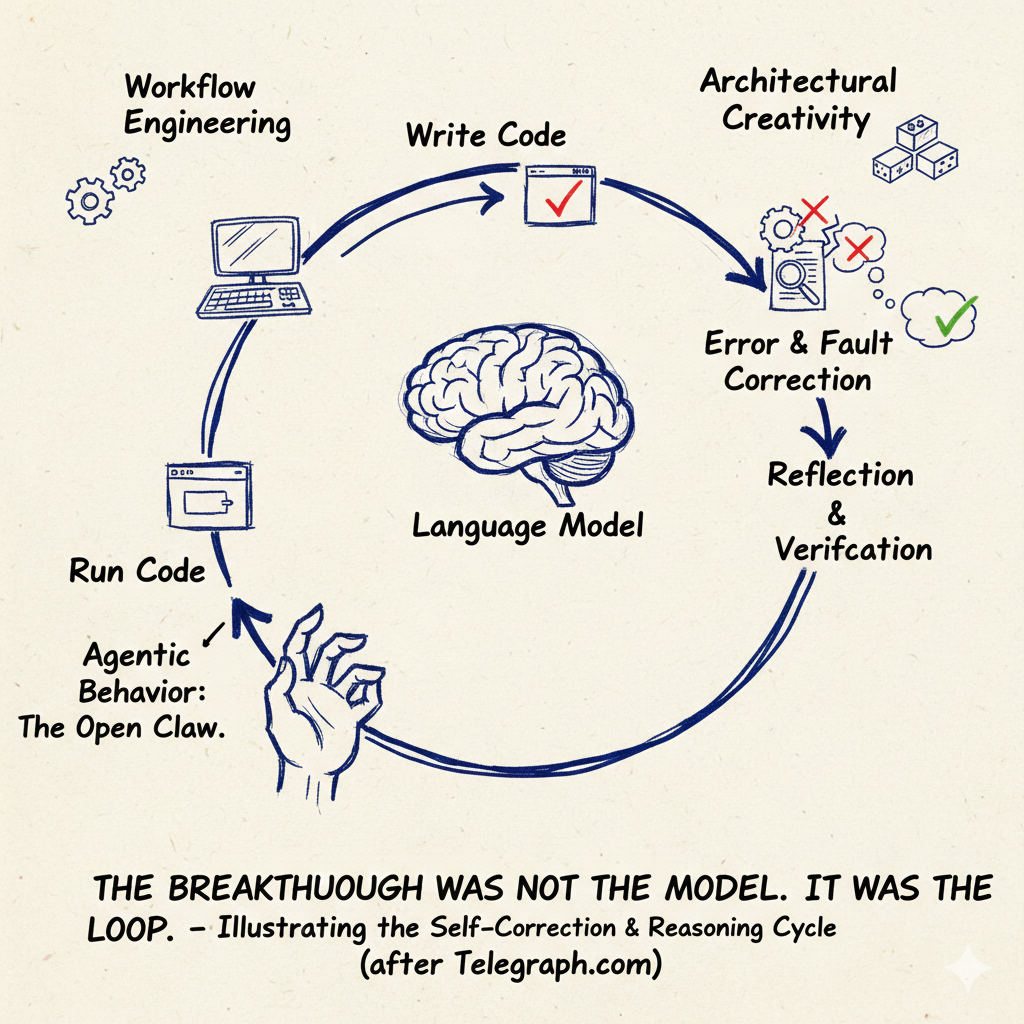

You might also like to read on Telegraph.com

Why Artificial Intelligence Is Breaking GDP and What Comes After

How cheap intelligence distorts measurement, hides value inside firms, and breaks old economic signals.

Who Gets to Train the AI That Will Rule Us

The upstream battle over models, data, and governance that decides who holds power in an AI society.

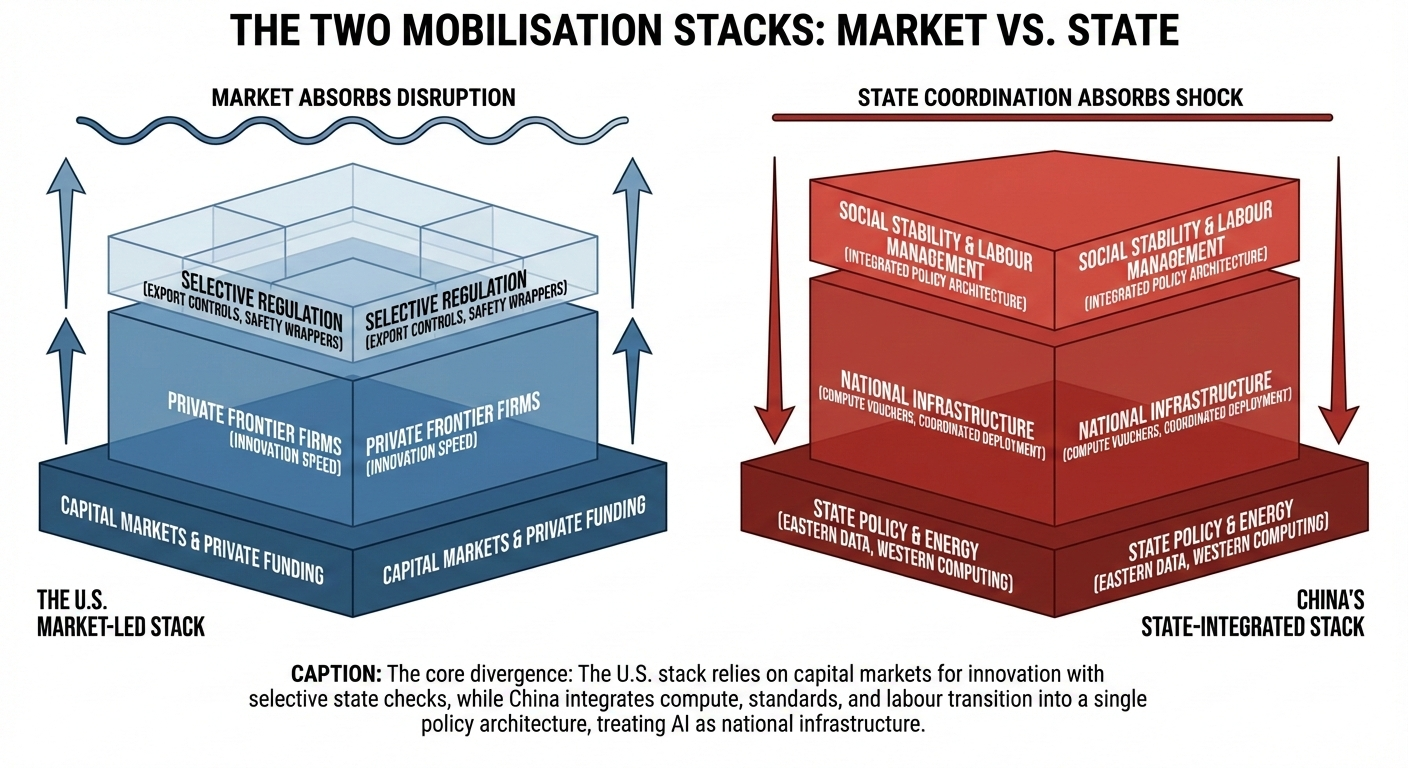

China Bets on Discipline in AI Race, as U.S. Rushes Toward General Intelligence

Why state capacity and enforcement may matter more than hype, speed, or venture theatre.

Britain’s Productivity Collapse And The Rentier Trap Martin Wolf Will Not Name

A systems argument about extraction, stagnation, and the institutional constraints that block renewal.

When Britain Turns Trust into a Weapon, It Cuts Its Own Throat

How credibility becomes a strategic asset — and what happens when it is spent as if it were disposable.