AI Is Making Cognition Cheap Faster Than Institutions Can Cope

Artificial intelligence is not primarily a productivity story. It is a pricing mismatch. Machine cognition is getting cheaper at a measurable rate, while wages, legal responsibility, and regulatory permission move on slower cycles and under different incentives. That gap is now the defining stress fracture in modern institutions.

Start with the simplest point that gets lost in the noise: institutions are not built to price cognition minute by minute. They price roles, grades, and licences. They price liability through insurance and precedent. They price permission through regulatory regimes designed for tail risk and public trust. Artificial intelligence is moving on a different clock.

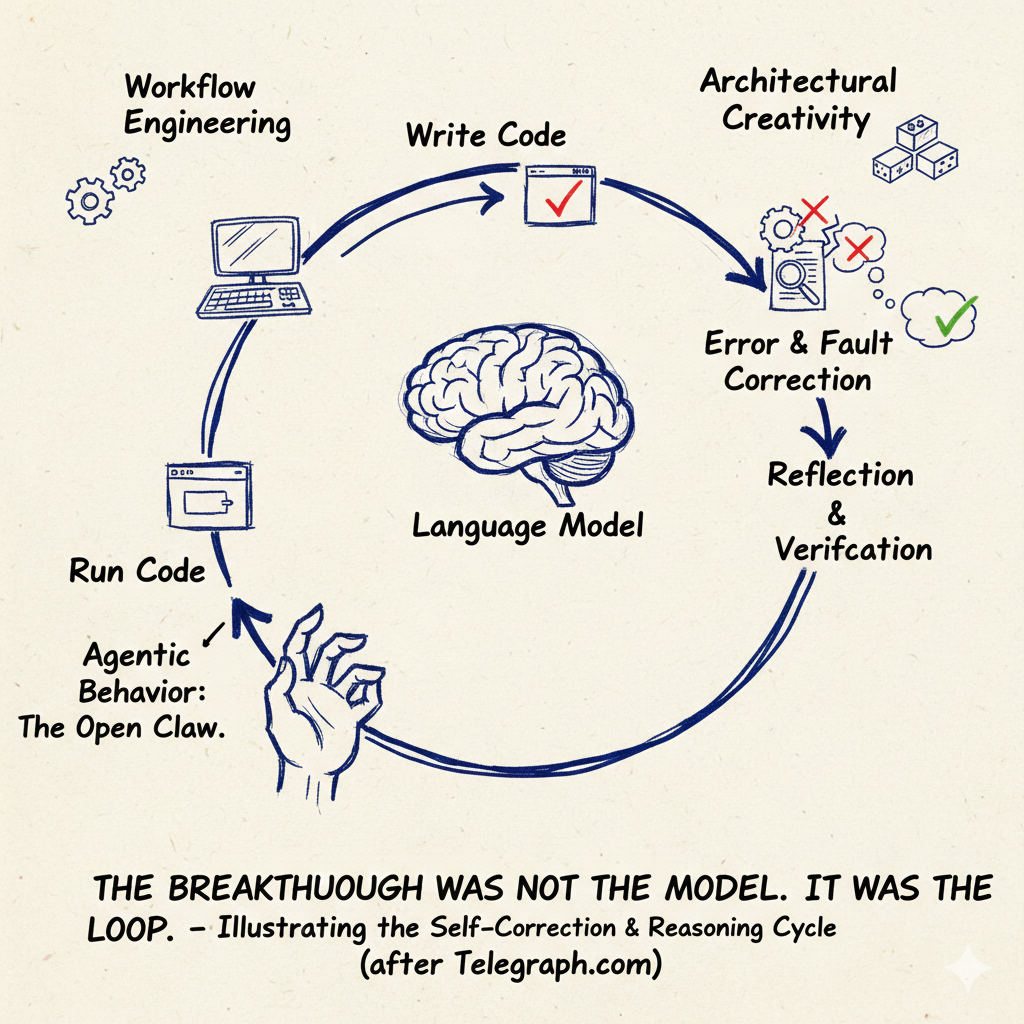

The result is not a single dramatic cliff. It is steady, compounding strain: tasks disappear before jobs do; responsibility concentrates upstream even as execution diffuses; compliance costs do not fall when model capability improves. In that world, the most important question is not whether AI works. It is whether the institutional pricing system can keep pace.

The cost of machine cognition is falling in public, measurable ways

It is easy to talk about intelligence getting cheaper in metaphors. It is harder to pin it to a number. The Stanford Institute for Human-Centered Artificial Intelligence did that work in its AI Index. In the report's research and development section, it records that the cost of querying a model performing at roughly GPT-3.5 level fell from $20 per million tokens in November 2022 to $0.07 per million tokens by October 2024.

“The cost of querying an AI model dropped from $20.00 per million tokens in November 2022 to just $0.07 per million tokens by October 2024.”

Source: Stanford HAI, AI Index 2025
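The scale of that fall is easier to grasp as a rate. The short Python sketch below is illustrative arithmetic only. It uses nothing but the two price points quoted above and assumes, as a simplification, a smooth exponential decline over the 23 calendar months between November 2022 and October 2024. The function names are hypothetical helpers, not part of any published methodology.

```python
# Illustrative arithmetic only: the two price points are the AI Index figures
# quoted above; the smooth exponential path between them is an assumption.


def months_between(start_year: int, start_month: int, end_year: int, end_month: int) -> int:
    """Whole calendar months from start to end (Nov 2022 -> Oct 2024 gives 23)."""
    return (end_year - start_year) * 12 + (end_month - start_month)


def overall_fold_decline(start_price: float, end_price: float) -> float:
    """How many times cheaper the end price is than the start price."""
    return start_price / end_price


def annualised_fold_decline(start_price: float, end_price: float, months: int) -> float:
    """Constant yearly fold decline that would connect the two prices over `months`."""
    return overall_fold_decline(start_price, end_price) ** (12 / months)


START_PRICE = 20.00  # US dollars per million tokens, November 2022
END_PRICE = 0.07     # US dollars per million tokens, October 2024
MONTHS = months_between(2022, 11, 2024, 10)

fold = overall_fold_decline(START_PRICE, END_PRICE)
per_year = annualised_fold_decline(START_PRICE, END_PRICE, MONTHS)
annual_drop = 1 - 1 / per_year  # share of the cost that disappears each year

if __name__ == "__main__":
    print(f"Months elapsed:          {MONTHS}")
    print(f"Overall fall:            {fold:.0f}x cheaper")
    print(f"Implied yearly fall:     {per_year:.1f}x cheaper")
    print(f"Implied yearly decline:  {annual_drop:.1%}")
```

On those assumptions the figures imply a fall of roughly 286-fold overall. That is equivalent to the cost becoming about 19 times cheaper each year, or a decline of close to 95 per cent a year. Real prices moved in steps as new models launched, so the smooth rate is a way of reading the trend, not a description of any single year.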

Whatever one thinks about the broader hype cycle, this is a real input-cost trend. It matters because it hits the economy where institutions are most sensitive: labour pricing. Labour markets can tolerate automation when automation remains a capital tool with a noticeable marginal cost. They struggle when cognition becomes cheap enough to be treated as a commodity.

This is the theme Sam Altman, OpenAI’s chief executive, keeps returning to in public remarks: the marginal cost of intelligence is falling faster than most people intuitively expect. You do not need to endorse every forecast to accept the immediate point. Cost declines at this scale do not remain contained inside the AI sector. They spill into procurement, staffing and organisational design decisions.

Liability does not deflate with cognition. It concentrates.

When cognition gets cheaper, the instinctive focus is job displacement. That is not where legal pressure lands. Legal pressure lands on responsibility: who is answerable when a decision harms someone, breaches a duty, or triggers a loss?

Modern legal systems do not assign blame to intelligence itself. They assign it to persons and entities. That is why the institutional response has been doctrinal rather than philosophical. The European Union’s revised Product Liability Directive makes the direction of travel explicit by treating software and AI systems as products for the purposes of strict liability.

“Software is a product for the purposes of applying no-fault liability.”

Source: Directive (EU) 2024/2853

The economic meaning is straightforward. As cognition gets cheaper, the legal cost of responsibility tends to rise because it is pulled upstream into formal accountability structures. Risk does not disappear. It becomes more concentrated and more carefully priced.

The United Kingdom’s automated vehicles framework illustrates the same impulse in a different domain. Each authorised automated vehicle must have an authorised self-driving entity that carries responsibility for how the system operates, even when no human is actively driving.

“Each authorised automated vehicle must have an authorised self-driving entity who will be responsible for the way that the vehicle drives.”

Source: UK Automated Vehicles Bill explanatory notes

Labour markets price roles, not tasks

The most durable insight in labour economics is that jobs are bundles of tasks. AI does not need to replace the bundle to change the market. It needs only to replace enough tasks to alter leverage, staffing ratios, and bargaining power.

The OECD has long emphasised task-based analysis to avoid crude automation forecasts. Its work on AI and employment shows that most occupations are only partially exposed to automation, but that task composition within jobs can change rapidly.

The International Labour Organization reaches a similar conclusion from a different angle. Its global studies on generative AI stress that exposure does not equal elimination. Tasks are reshaped first; roles follow slowly, if at all.

The practical outcome is already visible. Firms do not announce job replacement. They thin junior layers, compress teams, and demand output that once required more human time. Wages remain sticky because they compensate responsibility, experience, and legal exposure rather than raw cognitive throughput.

Why this is not simply another automation cycle

Earlier waves of automation changed how people worked but did not commoditise cognition at scale. What is different now is replicability. Once a model performs a cognitive task competently, it can be invoked repeatedly at near-zero marginal cost. Labour markets still pay for the person, the licence, and the liability burden. That mismatch is the source of today’s strain.

Regulation is designed for tail risk, not deployment speed

Regulatory systems are not slow by accident. They are slow by design. Their purpose is stability, public trust, and worst-case harm prevention. That design collides with a technology whose iteration cycle is measured in weeks and whose deployment is often global.

The US National Institute of Standards and Technology (NIST) frames AI governance as an organisational process involving documentation, oversight and accountability. Those processes do not become cheaper because inference becomes cheaper.

“Documentation can enhance transparency, improve human review processes, and bolster accountability.”

Source: NIST AI Risk Management Framework

As model use expands, governance costs tend to rise, not fall, because the risk surface expands with it. Regulatory friction is therefore not a policy failure. It is an institutional constant.

When permission is expensive, activity routes around it

When compliance costs exceed perceived benefit, actors do not stop. They restructure. This pattern, known as regulatory arbitrage, is well documented in finance and technology. AI does not change the logic. It increases the payoff.

The workaround is often internal and legal: private deployment before public permission, offshoring of certain functions, or reclassification of systems as tools rather than products. There is also a payments dimension. If execution and settlement move outside legacy rails, supervision becomes harder.

The Bank for International Settlements has repeatedly warned that new payment instruments complicate oversight precisely because they sit outside traditional intermediaries. The concern is not machine autonomy, but governance erosion.

The junior labour bottleneck institutions are not prepared for

The quiet failure mode is institutional reproduction. Entry-level roles exist to absorb low-risk tasks under supervision, allowing judgement to be trained before responsibility is assigned.

AI removes this layer first because it is document-heavy, repetitive, and easiest to substitute without altering formal accountability. Senior humans remain responsible, but the work that once trained the next generation is quietly automated away.

In the short term, institutions appear more efficient. In the medium term, pipelines thin. In the long term, a smaller cohort of credentialed professionals carries greater liability, supported by systems whose work they never learned to do themselves.

The institutional stress fracture in one line

AI makes cognition cheap. Institutions still price labour, responsibility, and permission as if cognition were scarce.

Conclusion

Artificial intelligence is not destabilising society because it thinks too well. It is destabilising institutions because it makes cognition cheap in systems that price work, responsibility, and permission slowly and in bulk.

Labour markets compensate roles, not tasks. Legal systems allocate blame, not efficiency. Regulators manage tail risk, not iteration speed. None of this changes simply because models improve.

The result is not immediate collapse, but mounting strain. Tasks disappear before roles do. Junior pipelines thin before employment falls. Liability concentrates upstream even as decision-making diffuses.

This is not a question of slowing intelligence. It is a question of re-pricing responsibility, training, and regulatory permission in a world where cognition is no longer scarce. Institutions that fail to do so will not be replaced by machines. They will become brittle, opaque, and increasingly difficult to govern.

More Telegraph.com reporting on AI

Telegraph.com has a growing collection of in-depth analysis on artificial intelligence. To browse the full archive, visit our AI category here: https://telegraph.com/category/artificial-intelligence-ai/